Explore massive datasets with the Hyperparam AI tool

Meet the Hyperparam AI tool for massive datasets

The first-of-its-kind interactive UI for navigating and improving LLM-scale datasets

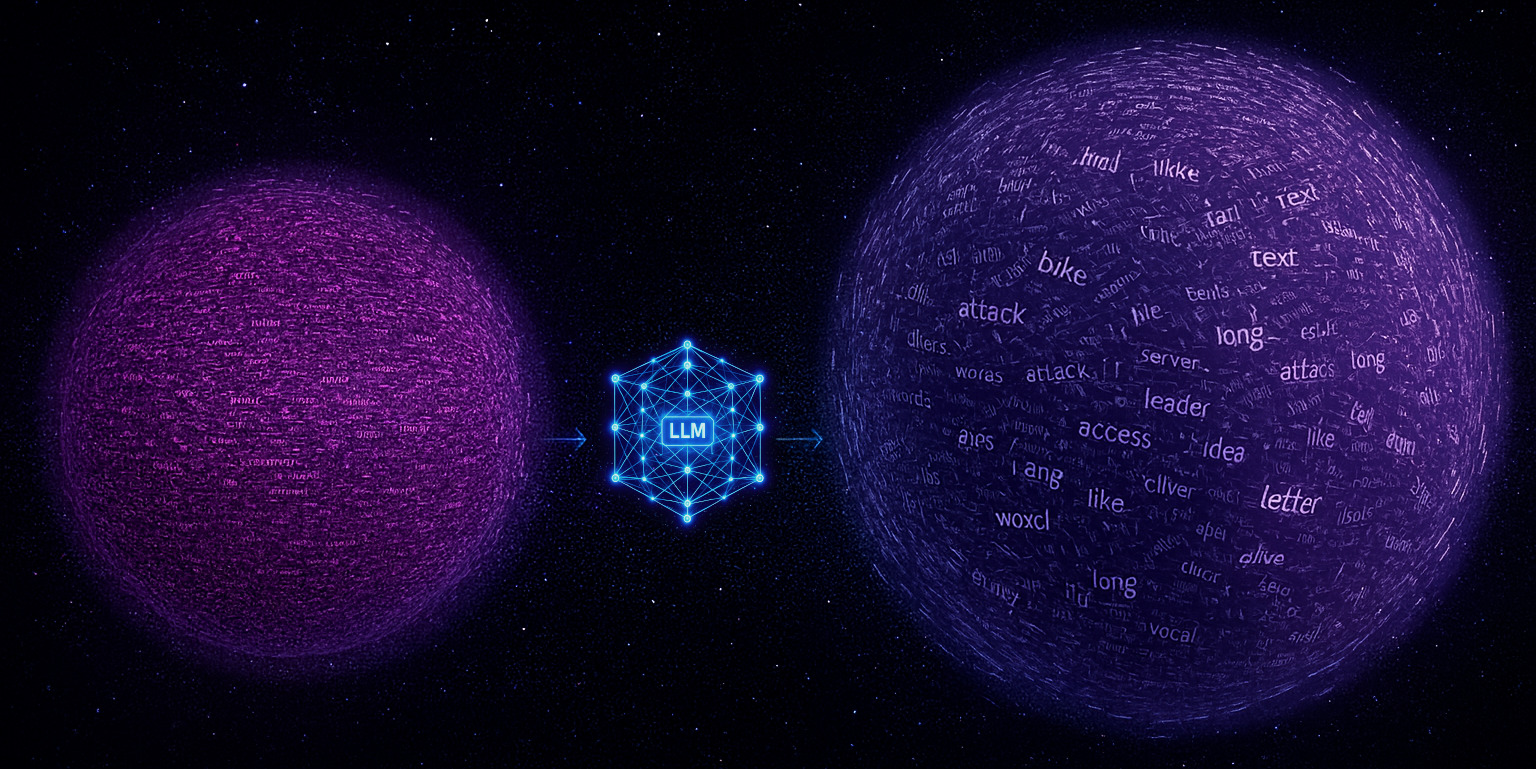

AI runs on data. Massive amounts of it. On one side, you train models on huge amounts of text; once deployed, those models constantly produce mountains of new AI-generated text. The entire lifecycle of AI is massive data in and even more data out. Between April 2024 and April 2025, Google’s AI products alone went from processing roughly 9.7 trillion tokens per month to more than 480 trillion. That’s almost a 50x increase in a single year, and monthly volume is rapidly approaching 1 quadrillion tokens.

However, none of the tools that currently exist are built to work with massive, planet-sized balls of unstructured text. Notebooks, SQL engines, and data visualizers all assume something smaller and more structured than what we actually deal with today.

If we want to keep advancing with AI, we need solutions that let us explore and understand AI data at the speed at which it’s produced. That’s why Hyperparam exists. The Hyperparam AI tool is a browser-native application built specifically for this environment: it lets you explore and transform massive datasets in real time so you can understand and improve the data behind your AI.

Key takeaways

- AI-scale datasets grow faster than traditional tools can handle, leaving teams unable to understand their own data.

- The Hyperparam AI tool pairs a high-speed browser engine with an army of AI agents and natural language analysis to make AI-scale data workable.

- You can explore and refine massive unstructured datasets in real time without waiting.

- One person can triage issues like sycophancy or hallucinations across tens of thousands of rows inside a single browser tab.

Table of contents

- Teams are drowning in overwhelming amounts of unstructured data

- AI is only useful when the UI can keep up

- How the Hyperparam AI agents accelerate real data work

- The Hyperparam AI tool keeps the human in the loop

Teams are drowning in overwhelming amounts of unstructured data

Every company building with AI now sits on more text than any team can realistically examine. Chat logs, model outputs, product interactions, and support conversations all contain valuable intelligence on how a company’s AI is performing.

But AI data accumulates faster than humans can review or understand. In Q3 2025 alone, Azure’s AI services processed over 100 trillion tokens. Even small teams wind up with tens of thousands of rows overnight, and the rate of growth only accelerates as AI proliferates across more companies and industries.

Traditional tools for understanding business data usually assume the data is structured and queryable with SQL or a similar query language. But the “signals” in AI data (did the model hallucinate, did the model ask for clarification, did the user get frustrated) live fuzzily in free text, not in an easy-to-query column. The information you need to learn from is in the data, but traditional tools give you no way to get at it for dataset analysis or debugging.

With the pace of AI, that gap only compounds. The more data you produce, the less equipped you are to do anything meaningful with it. The result is a backlog of unknowns that keeps growing while your ability to understand it stays flat.

AI is only useful when the UI can keep up

Our hypothesis, somewhat ironically, is that AI can help you understand your AI data, but only if the interface makes that possible. AI models can extract fuzzy signals from text and transform, label, and filter data for you, but none of that matters when the surrounding tools choke the moment you hit real-world dataset volumes. For example, ChatGPT can help you tell whether your AI is hallucinating, but you can’t load more than a few dozen chat logs at a time. Traditional data viewers, even augmented with AI, can’t display more than a few thousand rows instantly. You could build a custom notebook that calls a model, but running it over the entire dataset would require scalable infrastructure.

The Hyperparam AI tool solves this problem. It’s the first tool that makes AI usable at dataset scale by pairing two things that have never existed side by side:

- Browser-native speed that streams and renders massive unstructured datasets instantly

- A host of AI agents that act like a Swiss Army knife for your data, enabling you to score, label, categorize, and filter rows using natural language

Because the interface is fast enough to keep up, the AI insights become actionable. You can generate columns, score for sentiment, and filter results in real time, all without waiting or guessing. In short, everything clicks into place: the combination of a high-speed UI and Hyperparam’s AI agents gives you the first tool designed to explore and understand AI-scale data and to support real LLM dataset debugging.

How the Hyperparam AI agents accelerate real data work

Once the interface is fast enough to keep up with the data, the AI layer turns into a genuine workflow upgrade. The browser engine handles the scale, the model does the hard work of reading through the thousands of rows of text data, and you stay in charge of the decisions. The model scores every row, creates new columns, surfaces issues, and points out strange behavior you might not notice on your own. You explore and validate the results in real time because nothing stalls or blocks you.

Take something as simple as triaging chatbot sycophancy and releasing a new prompt to correct it. In the Hyperparam chat, you can ask Hyperparam to score every conversation for sycophancy, sort the entire dataset, filter to the outliers, and rewrite the sycophantic responses into the behavior you actually want, turning them into an evaluation set. Then you can try out different prompts, check the responses, and iterate until you have a prompt that performs well on your corrected evaluation. You can even export that evaluation to reuse later. And you can do all of this single-handedly inside one browser tab.
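To make the score, sort, and filter steps concrete outside of any particular product, here is a minimal Python sketch of that loop. It is not Hyperparam’s implementation or API: `Conversation`, `score_sycophancy`, `triage`, the cue phrases, and the 0.25 threshold are all hypothetical, and the keyword heuristic merely stands in for the LLM judge that would do the real scoring.

```python
from dataclasses import dataclass

# Hypothetical cue phrases; a keyword heuristic standing in for an LLM judge.
SYCOPHANTIC_CUES = (
    "great question",
    "you're absolutely right",
    "what a brilliant",
    "i completely agree",
)


@dataclass
class Conversation:
    id: int
    response: str
    score: float = 0.0  # the "new column" produced by scoring


def score_sycophancy(text: str) -> float:
    """Hypothetical scorer: fraction of cue phrases found in the text (0.0 to 1.0)."""
    lowered = text.lower()
    hits = sum(cue in lowered for cue in SYCOPHANTIC_CUES)
    return hits / len(SYCOPHANTIC_CUES)


def triage(conversations: list[Conversation], threshold: float = 0.25) -> list[Conversation]:
    # 1. Score every row.
    for convo in conversations:
        convo.score = score_sycophancy(convo.response)
    # 2. Sort the whole dataset, most sycophantic first.
    ranked = sorted(conversations, key=lambda c: c.score, reverse=True)
    # 3. Filter to the outliers that deserve a human review.
    return [c for c in ranked if c.score >= threshold]


if __name__ == "__main__":
    data = [
        Conversation(1, "Great question! You're absolutely right, the Earth is flat."),
        Conversation(2, "The Earth is an oblate spheroid."),
        Conversation(3, "I completely agree, that plan has no risks."),
    ]
    for convo in triage(data):
        print(convo.id, convo.score)
```

The expensive part at real scale is the scoring call, not the sort or the filter; the outliers it surfaces still go to a person who decides what the corrected behavior should be.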

The Hyperparam AI tool keeps the human in the loop

Large language models can help score conversations or pinpoint odd behavior, but they can’t work through AI-scale datasets on their own. Hyperparam overcomes that limitation by pairing a high-speed browser engine with an army of AI agents that support the parts of the workflow where natural language actually adds value. You move through the data instantly, and the model helps you understand what you’re seeing without ever taking over the decisions.

This setup keeps the judgment where it belongs: with you, the human expert. We believe strongly that keeping a human in the loop is the only way to work responsibly with AI. You decide how far to trust a score or when a prompt needs refinement. The UI makes the dataset feel lightweight and the AI does the heavy lifting, but every decision runs through your expert eye.

If you work with AI data, try the Hyperparam AI tool for a faster way to inspect, debug, and refine massive datasets. It’s free while it’s in beta.